Upcycling Models under Domain and Category Shift

Sanqing Qu, Tianpei Zou, Florian Röhrbein, Cewu Lu, Guang Chen*, Dacheng Tao, Changjun Jiang

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023

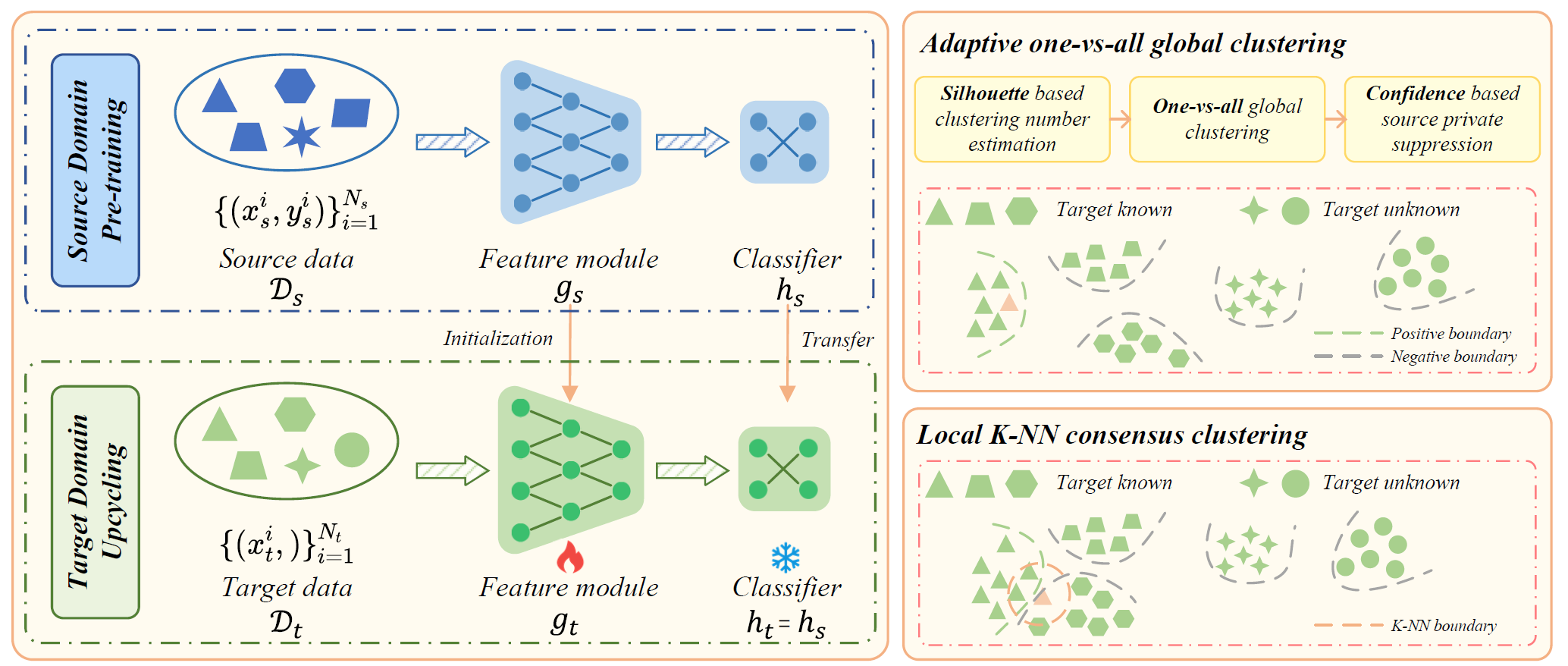

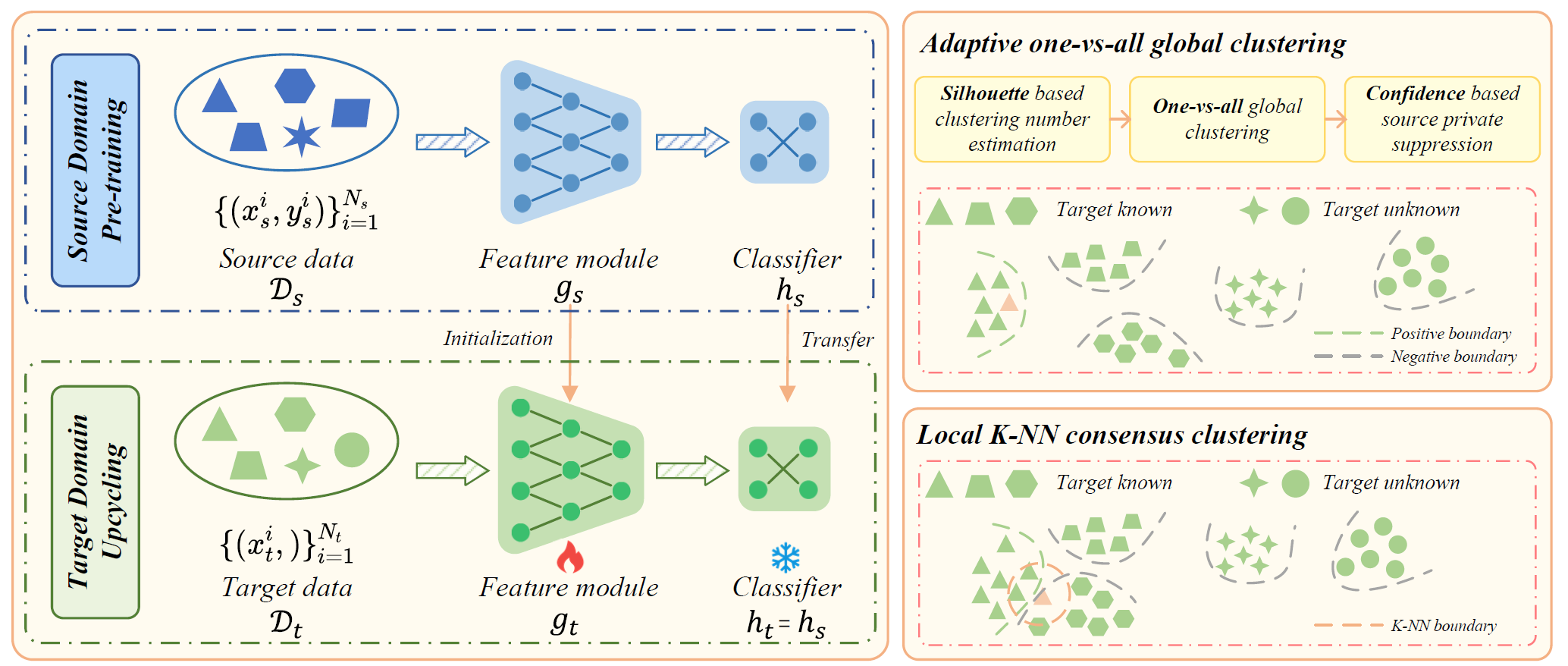

Deep neural networks (DNNs) often perform poorly in the presence of domain shift and category shift. To address this, we explore Source-free Universal Domain Adaptation (SF-UniDA). SF-UniDA is appealing because universal model adaptation can be achieved using only a standard pre-trained closed-set model, i.e., without access to the raw source data and without a dedicated model architecture. To this end, we develop a generic global and local clustering technique (GLC). GLC is equipped with an innovative one-vs-all global pseudo-labeling strategy that separates "known" and "unknown" data samples under various category shifts. Remarkably, in the most challenging open-partial-set DA scenario, GLC outperforms UMAD by 14.8% on the VisDA benchmark.

An illustration of the GLC framework is shown below.

Demo video