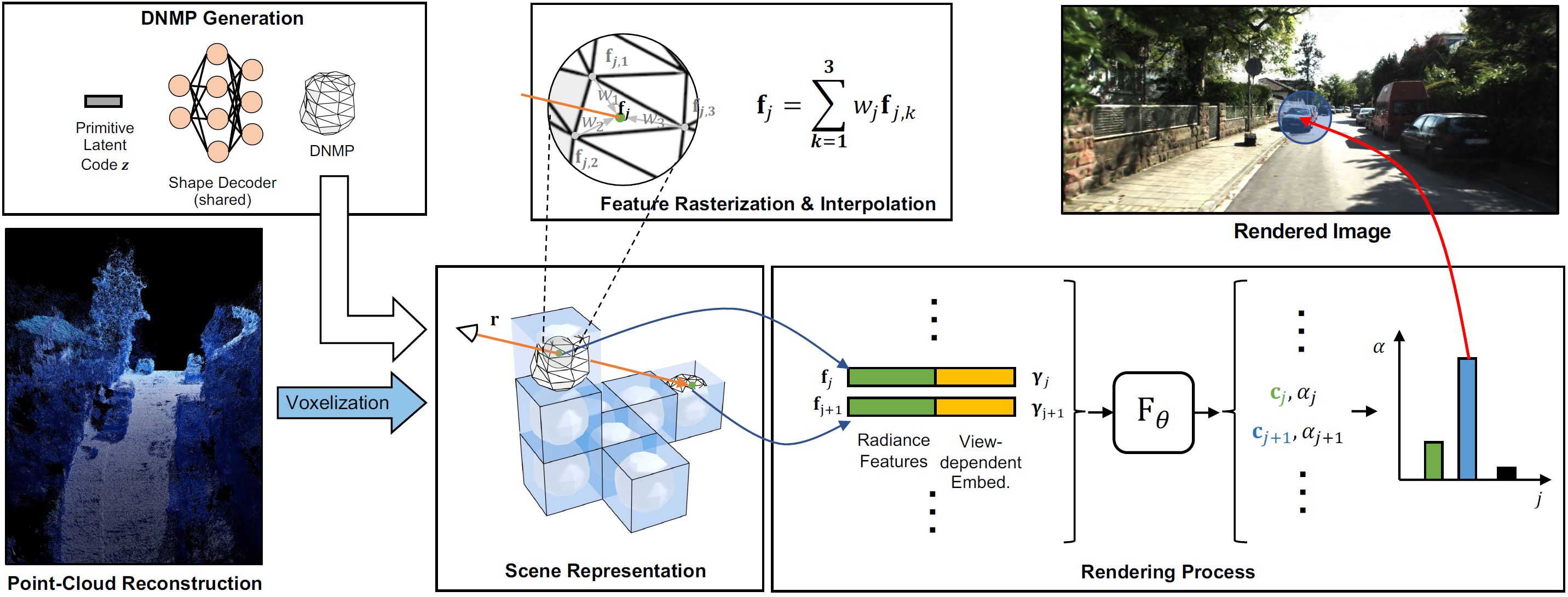

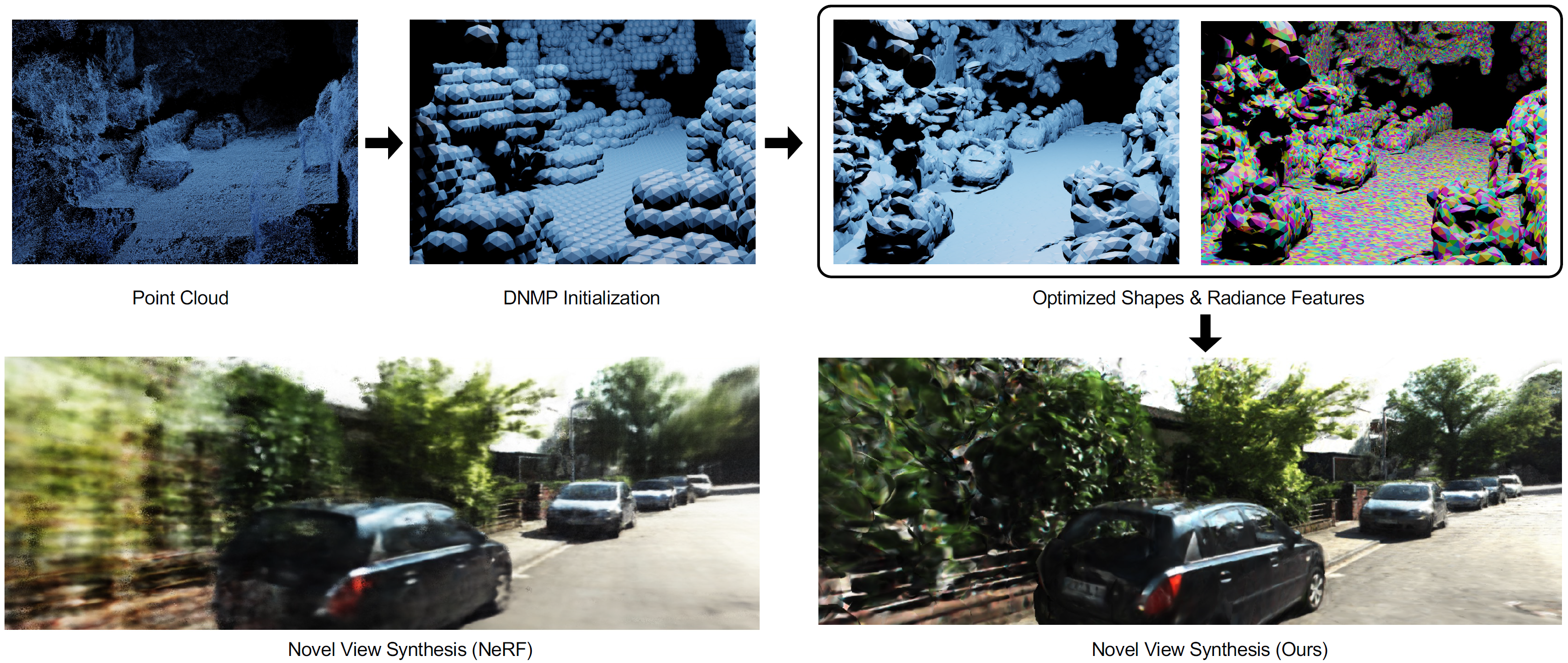

Taking patchy point clouds as input, we first voxelize the points and then initialize a Deformable Neural Mesh Primitive (DNMP) for each voxel. During training, the shapes of the DNMPs are deformed to model the underlying 3D structures, while their radiance features are learned to model local radiance information for neural rendering. With this representation, we achieve efficient and photo-realistic rendering of urban scenes.
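
As a rough illustration of the voxelization step only, the following minimal sketch groups 3D points by the voxel that contains them and reports one center per occupied voxel, where a primitive could be placed. The function names `voxelize` and `voxel_centers`, the uniform axis-aligned grid, and the choice of the voxel center as the anchor are assumptions made for illustration; they are not taken from the authors' implementation, and the mesh deformation and radiance features are not modeled here.

```python
from collections import defaultdict
from math import floor


def voxelize(points, voxel_size):
    """Group 3D points by the integer index of the voxel containing them.

    Assumes a uniform, axis-aligned grid anchored at the origin.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    voxels = defaultdict(list)
    for x, y, z in points:
        # floor() keeps negative coordinates in the correct voxel.
        key = (floor(x / voxel_size),
               floor(y / voxel_size),
               floor(z / voxel_size))
        voxels[key].append((x, y, z))
    return dict(voxels)


def voxel_centers(voxels, voxel_size):
    """Return the geometric center of each occupied voxel.

    Hypothetical anchor position for one primitive per occupied voxel.
    """
    return {
        key: tuple((index + 0.5) * voxel_size for index in key)
        for key in voxels
    }


# Example: three points, two of which share a voxel.
points = [(0.1, 0.2, 0.3), (0.4, 0.4, 0.4), (1.2, 0.1, -0.1)]
voxels = voxelize(points, voxel_size=1.0)
centers = voxel_centers(voxels, voxel_size=1.0)
```

Only occupied voxels appear in the result, so empty space costs nothing; this is one plausible reading of "for each voxel" when the input point cloud is sparse.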